Question About robots.txt

-

Hi. I made my blog public again instead of private a little while ago and on Google it still says that a description of this site cannot be displayed because of the site’s robots.txt.

Does anybody know how long it takes for the Google description to be updated?

Thanks,

Tom

-

Hi Tom,

Have you published new content since the site became public? I’m asking because it can take weeks for a site to be indexed: https://en.support.wordpress.com/search-engines/

If you’ve recently made changes to a URL on your site, you can update your web page in Google Search with the Submit to Index function of the Fetch as Google tool. This function lets you ask Google to crawl and index your URL. See https://support.google.com/webmasters/answer/6065812?hl=en

-

Hi timethief.

I’ve published a new post since making the site public, yes. I will wait for 2 weeks and see what happens. Do you think it’s worth using the Submit to Index function as well?

Thanks,

Tom

-

I’m sorry, but I’m not going to make that call for you. If it were me, I would publish at least 2 to 3 times a week for about 4 weeks after removing the privacy setting before asking Google to crawl my site.

-

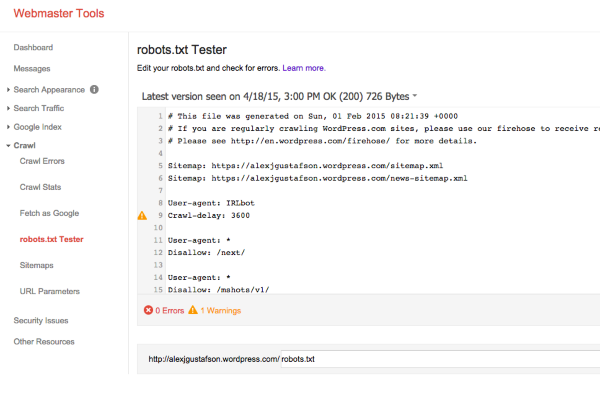

Hi timethief, I don’t know if this is normal or not but this is what the robots.txt for my website says:

    # This file was generated on Tue, 14 Apr 2015 20:58:32 +0000
    # If you are regularly crawling WordPress.com sites, please use our firehose to receive real-time push updates instead.
    # Please see http://en.wordpress.com/firehose/ for more details.

    Sitemap: https://technoteamblog.wordpress.com/sitemap.xml
    Sitemap: https://technoteamblog.wordpress.com/news-sitemap.xml

    User-agent: IRLbot
    Crawl-delay: 3600

    User-agent: *
    Disallow: /next/

    User-agent: *
    Disallow: /mshots/v1/

    # har har
    User-agent: *
    Disallow: /activate/

    User-agent: *
    Disallow: /public.api/

    # MT refugees
    User-agent: *
    Disallow: /cgi-bin/

    User-agent: *
    Disallow: /wp-login.php

    User-agent: *
    Disallow: /wp-admin/

And when I ask Google to crawl my website on Google Webmaster Tools, there is an error as it says “Disallow” in my site’s robots.txt.

Thanks,

Tom

-

Hi Tom,

Those are all normal settings for robots.txt. You’ll notice the disallowed paths all have to do with WordPress administration, not your site’s actual content.

> And when I ask Google to crawl my website on Google Webmaster Tools, there is an error as it says “Disallow” in my site’s robots.txt.

I would expect to see the error for those pages mentioned with Disallow, but there should be no errors associated with re-crawling your Posts and Pages included in the sitemap.
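If you want to sanity-check this yourself, here is a rough Python sketch of the idea. It is only an illustration under simplifying assumptions: it merges every `User-agent: *` group and does plain prefix matching, with no `Allow` rules or wildcards, so it is not how Google's checker actually works. The helper names `disallowed_prefixes` and `is_blocked` and the example post path are made up for this sketch.

```python
# Sketch only: simplified prefix matching, no Allow rules or wildcards.
ROBOTS_TXT = """\
Sitemap: https://technoteamblog.wordpress.com/sitemap.xml

User-agent: IRLbot
Crawl-delay: 3600

User-agent: *
Disallow: /next/

User-agent: *
Disallow: /mshots/v1/

# har har
User-agent: *
Disallow: /activate/

User-agent: *
Disallow: /public.api/

# MT refugees
User-agent: *
Disallow: /cgi-bin/

User-agent: *
Disallow: /wp-login.php

User-agent: *
Disallow: /wp-admin/
"""


def disallowed_prefixes(robots_txt, agent="*"):
    """Collect Disallow prefixes from every group that names `agent`."""
    prefixes = []
    group_agents = []   # user agents named by the current group
    in_rules = False    # True once the current group has seen a rule line
    for raw in robots_txt.splitlines():
        line = raw.split("#", 1)[0].strip()   # drop comments
        if ":" not in line:
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()
        if field == "user-agent":
            if in_rules:                      # a new group starts here
                group_agents = []
                in_rules = False
            group_agents.append(value.lower())
        elif field in ("disallow", "allow", "crawl-delay"):
            in_rules = True
            if field == "disallow" and value and agent.lower() in group_agents:
                prefixes.append(value)
    return prefixes


def is_blocked(path, prefixes):
    """True if `path` starts with any disallowed prefix."""
    return any(path.startswith(prefix) for prefix in prefixes)


prefixes = disallowed_prefixes(ROBOTS_TXT)
print(is_blocked("/wp-admin/", prefixes))              # admin path
print(is_blocked("/2015/04/14/some-post/", prefixes))  # hypothetical post path
```

With these rules, the admin path comes back as blocked and the post path does not, which is why the Disallow lines on their own should not stop your posts and pages from being crawled.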

Did the errors appear for those posts and pages not marked as disallow?

-Alex G.

-

Hi Alex. Thanks for your reply.

I’m not really sure. I’ve asked Google to crawl my site but it never seems to go through and just highlights “Disallow” in red. Not sure why.

Thanks,

Tom

-

-

Hi Tom,

Could you clarify where you see the error, then?

I just don’t see any errors regarding the disallow at all on my site:

Crawl-delay: 3600 is also highlighted in yellow.

It is highlighted in yellow in Google Webmaster Tools because Googlebot ignores this rule. Other crawlers do respect it, though.
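It may also help to see that, in your file, the crawl delay sits in a group that only names IRLbot. Here is a small sketch using Python's standard `urllib.robotparser` (its `crawl_delay` method needs Python 3.6 or later). I've trimmed the rules down to two groups for the example, so treat it as an illustration rather than a copy of your full robots.txt.

```python
from urllib.robotparser import RobotFileParser

# Trimmed-down rules for illustration: one IRLbot group, one catch-all group.
rules = """\
User-agent: IRLbot
Crawl-delay: 3600

User-agent: *
Disallow: /wp-admin/
"""

parser = RobotFileParser()
parser.parse(rules.splitlines())

# The delay is declared only for IRLbot; the catch-all group sets none.
irlbot_delay = parser.crawl_delay("IRLbot")
googlebot_delay = parser.crawl_delay("Googlebot")
print(irlbot_delay, googlebot_delay)
```

The parser reports a delay of 3600 for IRLbot and no delay for Googlebot, so that line has no effect on how Google crawls your blog.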

-Alex G.

- The topic ‘Question About robots.txt’ is closed to new replies.